Vision-based Tactile Sensing

Background

Compared to the human sense of touch, robotic tactile sensing systems are considerably less capable, both in functionality and in flexibility. Visual sensing benefits from cameras, which are widely used for their high resolution, small size and low cost. Tactile sensing, by contrast, still lacks a comparably comprehensive solution. However, the dramatic progress of computer vision over the last decade, driven in large part by its overlap with machine learning, opens up the possibility of using cameras to sense the deformation that soft materials undergo when subjected to force.

Research on Tactile Sensing

In this project, a tactile sensing technique has been developed to provide robots with a sense of touch, which is crucial for many applications such as grasping and holding objects. The technique learns from data to accurately predict the distribution of the forces exerted by an object in contact with the sensing surface.
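To make the idea of learning a force distribution from data more concrete, here is a minimal sketch. It is not the method used in this project. It assumes that each camera frame has already been reduced to a fixed-length feature vector (for instance, averaged particle displacements over image regions) and that the force distribution is discretized into a fixed number of surface bins. It then fits a linear map between the two with ridge regression. The function names `fit_force_map` and `predict_forces` and the regularization weight `reg` are hypothetical.

```python
import numpy as np


def fit_force_map(features, forces, reg=1e-3):
    """Fit a ridge-regression map from image features to force bins.

    features: (n_samples, n_features) array, e.g. particle displacements.
    forces:   (n_samples, n_bins) array, force value in each surface bin.
    Returns W of shape (n_features + 1, n_bins); the last row is a bias.
    """
    n = features.shape[0]
    X = np.hstack([features, np.ones((n, 1))])  # append a bias column
    # Closed-form ridge solution: W = (X^T X + reg * I)^-1 X^T Y.
    # For simplicity the penalty is also applied to the bias row.
    A = X.T @ X + reg * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ forces)


def predict_forces(W, features):
    """Predict the per-bin force distribution for each feature vector."""
    X = np.hstack([features, np.ones((features.shape[0], 1))])
    return X @ W
```

A linear model is used here only because its closed-form solution keeps the sketch short. A nonlinear regressor, such as a neural network, could take its place with the same inputs and outputs.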

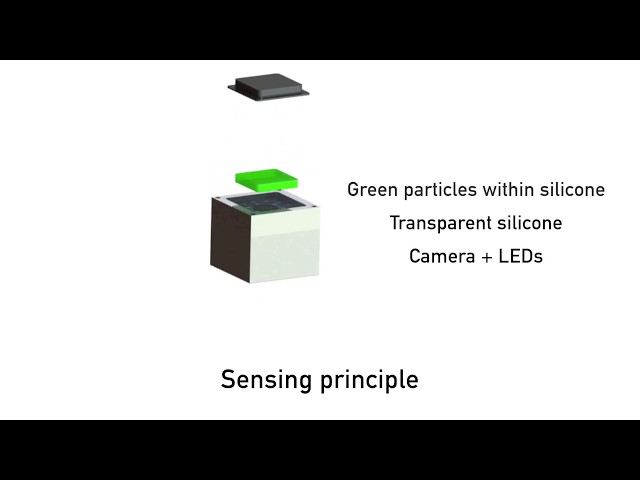

The sensor is built entirely from simple, low-cost components. A standard camera is placed below a soft material that contains a random spread of tiny plastic particles. When a force is applied to the surface, the material deforms and the particles move, and the camera captures this motion. The image patterns created by the moving particles are then processed to extract information about the forces that caused the deformation. Because the particles are densely embedded in the material, the sensor achieves a very high spatial resolution. The data-driven approach avoids having to model contact with soft materials explicitly, which is complex, and aims to estimate the distribution of the applied forces with high accuracy.
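The step of turning particle motion into numbers can be illustrated with a minimal sketch. It assumes grayscale images stored as NumPy arrays, a reference frame taken with no load, and a grid of image patches. For each patch, it estimates the rigid integer shift between the reference and the current frame using phase correlation. The functions `patch_shift` and `displacement_features` and the grid size are hypothetical. Phase correlation is a stand-in chosen for brevity, not the sensor's actual feature extractor, which the text does not specify. Real deformations are also not rigid translations of each patch.

```python
import numpy as np


def patch_shift(ref, cur):
    """Estimate the integer (dy, dx) translation of `cur` relative to `ref`
    using phase correlation."""
    cross = np.fft.fft2(cur) * np.conj(np.fft.fft2(ref))
    cross /= np.abs(cross) + 1e-12  # keep only the phase information
    corr = np.fft.ifft2(cross).real  # peaks at the translation
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = ref.shape
    # Peaks beyond the midpoint correspond to negative shifts (FFT wrap-around).
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)


def displacement_features(ref, cur, grid=(4, 4)):
    """Split both images into a grid of patches and return the estimated
    (dy, dx) shift of every patch, flattened into one feature vector."""
    h, w = ref.shape
    ph, pw = h // grid[0], w // grid[1]
    feats = []
    for i in range(grid[0]):
        for j in range(grid[1]):
            rows = slice(i * ph, (i + 1) * ph)
            cols = slice(j * pw, (j + 1) * pw)
            feats.extend(patch_shift(ref[rows, cols], cur[rows, cols]))
    return np.array(feats, dtype=float)
```

With a 4 x 4 grid, each frame yields a 32-element vector (two shift components per patch). Vectors of this kind could serve as the input to a learned regression from image features to force distribution.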

- Camill Trueeb, Carmelo Sferrazza and Raffaello D'Andrea. Towards vision-based robotic skins: a data-driven, multi-camera tactile sensor. Proceedings of the International Conference on Soft Robotics (to appear), New Haven, 2020.

- Carmelo Sferrazza, Adam Wahlsten, Camill Trueeb and Raffaello D'Andrea. Ground Truth Force Distribution for Learning-Based Tactile Sensing: A Finite Element Approach. IEEE Access, vol. 7, pp. 173438-173449, 2019.

- Carmelo Sferrazza and Raffaello D'Andrea. Transfer learning for vision-based tactile sensing. Proceedings of the International Conference on Intelligent Robots and Systems, Macau, 2019.

- Carmelo Sferrazza and Raffaello D'Andrea. Design, Motivation and Evaluation of a Full-Resolution Optical Tactile Sensor. Sensors, 19(4):928, 2019.